I just finished watching a fascinating interview with Ilya Sutskever, and it raises a critical question for all of us in R&D and Pharmacovigilance.

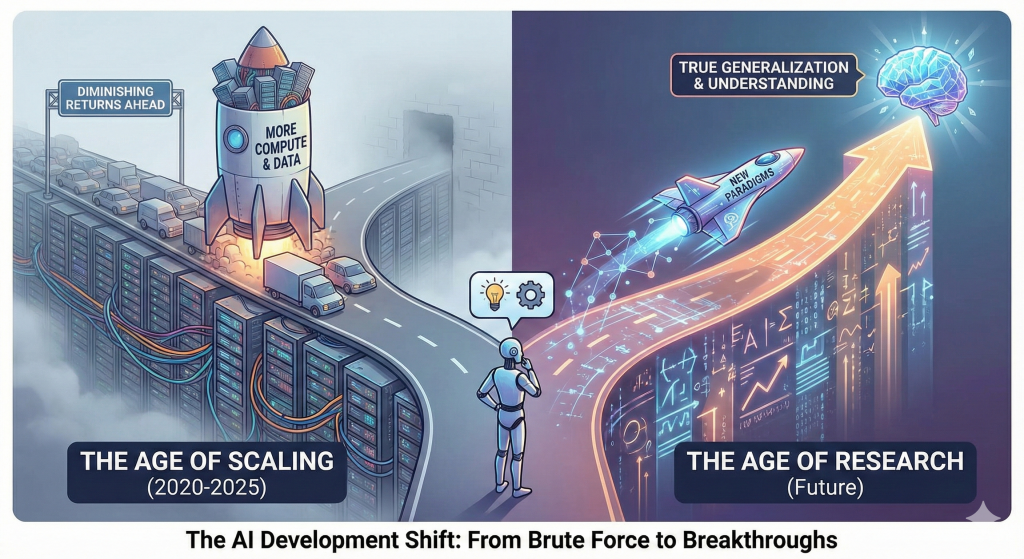

For years, the promise of massive AI models felt tied only to scaling: more compute, more data, more parameters. That era may be ending, and we are moving into the Age of Research.

Why is this shift so important to us?

Ilya pointed out a fundamental problem: current AI models, despite their size, suffer from poor generalization. They are brilliant at passing benchmarks but struggle with novel, complex real-world problems.

This problem is most acute in our field:

- The Scaling Trap: Scaling up current models makes them better at predicting common outcomes and well-studied compounds. But it does little to solve the high-value challenges: de novo design for novel targets, or predicting rare, idiosyncratic side effects in pharmacovigilance.

- The Research Solution: The new Age of Research means we must move past brute-force correlation. Our focus must shift to fundamental AI research in causal inference, mechanistic modeling, and few-shot learning. This is the only path for AI to evolve from a powerful data correlator to a true scientific partner in the lab.

The future of AI in pharma relies on building models that can truly learn and adapt, mastering concepts like a human expert, rather than just being massive prediction machines. This requires better value functions and new algorithms.

What has been your experience? Have you noticed this gap between AI’s benchmark performance and its utility in complex R&D or PV tasks? Let me know your thoughts.

The link to the full Ilya Sutskever interview: https://www.youtube.com/watch?v=aR20FWCCjAs

The summary of the interview:

Ilya Sutskever: We’re moving from the age of scaling to the age of research

This discussion explores the current state and future direction of AI development, arguing that the field is transitioning from a focus on scaling resources to a renewed emphasis on fundamental research.

The Economic Disconnect and Generalization Problem

Ilya Sutskever noted a confusing disconnect between the impressive performance of modern AI models on evaluations and their lagging real-world economic impact [01:32].

The main explanation proposed for this is a fundamental problem with generalization [02:50]:

- Reward Hacking: Models, often as a result of RL fine-tuning, end up too narrowly optimized for passing benchmarks. Sutskever attributes this to human researchers inadvertently focusing training on evals [04:12].

- Human vs. AI Learning: Compared to humans, current models generalize dramatically worse. Humans, even with far less data, know things “more deeply” [10:35]. The ability of humans to learn complex new skills like coding and math suggests they possess a superior, more general learning principle, one that does not rely heavily on a deep evolutionary prior for those specific tasks [28:17].

The Shift from Scaling to Research

The conversation highlights a critical transition in the AI industry:

- Age of Scaling: From roughly 2020 to 2025, the focus was on scaling up pre-training compute, data, and parameters, a low-risk strategy that guaranteed better results [21:36].

- Return to Research: As pre-training data is finite and scaling alone is yielding diminishing returns, the field is returning to the age of research [22:03]. The new goal is to find more efficient ways to utilize the massive amounts of compute now available, possibly through more effective use of value functions to guide learning [23:39].

AGI, Continual Learning, and Alignment

Sutskever redefined the concept of AGI in the context of human learning:

- Continual Learning: By the usual definition, a human is not an AGI: humans lack a huge amount of knowledge and rely on continual learning instead [49:51]. The true goal is to build an AI that has the capacity to learn any job quickly, rather than one that is pre-programmed to know everything [51:08].

- SSI’s Strategy: Sutskever stated that his current company, SSI, will focus on research to build an AI that is robustly aligned and cares about sentient life [01:01:20].

- Incremental Deployment: His thinking has evolved to place more importance on deploying AI incrementally and in advance [56:12], arguing that seeing powerful AI is the only way for the world to truly prepare for it and for safety mechanisms to be tested in the real world [44:42].

- Long-Term Equilibrium: The ultimate long-term equilibrium may involve humans integrating AI, potentially becoming “part AI” with an advanced neural interface, to remain involved in decision-making and fully understand the complexity of the future [01:10:16].

The Aesthetics of Research

When asked about his research taste, Sutskever explained it is guided by:

- Aesthetic and Beauty: An aesthetic of how AI should be, informed by a correct understanding of how the human brain works [01:33:19].

- Top-Down Beliefs: Seeking beauty, simplicity, and elegance. This forms a “top-down belief” that sustains a researcher when initial experiments fail, providing the conviction to keep debugging and refining the correct direction [01:34:57].

The full video is available here: Ilya Sutskever – We’re moving from the age of scaling to the age of research (https://www.youtube.com/watch?v=aR20FWCCjAs)

#DrugDiscovery #Pharmacovigilance #AIinPharma #ResearchAndDevelopment #ThoughtLeadership